WRS: In my lectures and writing, I often tell health care and fitness industry practitioners to seek out the original scientific articles behind the latest, greatest medical breakthroughs trumpeted in news headlines. But even with the articles in hand, what do the numbers mean? For some, so-called “statistical significance” is a shining stamp of approval. But you absolutely, positively MUST put the findings into context: even if the results are “significant,” what do they mean in real terms? For example, many years ago I wrote a claim-by-claim debunking of the dietary supplement pyruvate using the VERY same research articles that marketers were using to sell it! All I had to do was look at the experimental conditions, the numbers of subjects, whether the subjects were humans or animals, and the significant p values relative to the actual numbers. While the marketers spouted off that the product could help you shed 48% more fat and 37% more weight, the ACTUAL differences between the supplement and placebo groups were very small.
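To see how a big relative figure can hide a small absolute one, here is a minimal Python sketch. The numbers are hypothetical, chosen only so the relative figure matches the “48% more fat” style of claim; they are not taken from any pyruvate study.

```python
def relative_and_absolute(treatment: float, placebo: float) -> tuple[float, float]:
    """Return (relative % difference, absolute difference) of treatment vs placebo."""
    absolute = treatment - placebo
    relative_pct = 100.0 * absolute / placebo
    return relative_pct, absolute

# Hypothetical fat loss in kg over a trial (NOT data from any pyruvate study)
placebo_loss = 1.0
supplement_loss = 1.48

rel, ab = relative_and_absolute(supplement_loss, placebo_loss)
print(f"{rel:.0f}% more fat lost, but only {ab:.2f} kg more in absolute terms")
```

The same “48% more” headline would describe a 10 kg vs 14.8 kg difference just as well, which is why the absolute numbers are worth checking.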

In this second installment, guest blogger Christian Thoma, MSc, PhD Candidate, discusses the meaning of the word “significant” and what p-values really mean in practical terms. Over to you, mate!

Introduction: Is It Significant?

Everyone, from scientists to the interested public, often misunderstands significance as it applies to research findings. Despite over eight years in research, I've only recently developed a true appreciation for the importance of good statistical methods. I can appreciate why statistics makes some people's eyes roll, so this post isn't about explaining p-values or confidence intervals. However, I highly recommend reading the “Frequent misunderstandings” section of the Wikipedia entry for p-value.

“Significant” in Practical vs. Scientific Terms

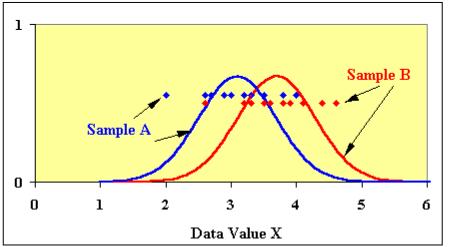

In common language, to say something is significant can mean it is important or substantial. In scientific writing, it usually refers to a statistical measure of whether two sets of numbers (the results of some study) are genuinely different or only look different because of normal variation unrelated to the study itself. For example, if we put 20 people on a strength training program and another 20 on a cycle-based interval program, measured their cholesterol after three months, and found that the average changes differed between the groups, we would want to know whether this difference was likely to be 'real' or just a chance finding (e.g. due to cholesterol varying from day to day). Using this meaning of significant (i.e. statistically significant), a significant finding is one where we can be reasonably confident that an observed difference was real.
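One way to put a number on 'real or just chance' is a permutation test, sketched below in Python. It is only one of several tests a researcher might use (a t-test is more common), shown here because it needs nothing beyond the standard library. The idea: shuffle everyone's results between the two groups many times and count how often a random split produces a difference at least as large as the one actually observed. That proportion is the p-value, and a small one (below a conventional, somewhat arbitrary cut-off such as 0.05) is what gets labelled statistically significant. The group data are simulated, hypothetical values, not results from any study.

```python
import random

def mean(values):
    return sum(values) / len(values)

def permutation_p_value(a, b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Shuffles the pooled values repeatedly and counts how often a random split
    gives a mean difference at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    n_a = len(a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        if abs(mean(pooled[:n_a]) - mean(pooled[n_a:])) >= observed:
            extreme += 1
    # +1 in numerator and denominator counts the observed split itself
    return (extreme + 1) / (n_resamples + 1)

# Hypothetical cholesterol changes (mmol/L) for two groups of 20, simulated
sim = random.Random(42)
strength = [sim.gauss(-0.10, 0.30) for _ in range(20)]
interval = [sim.gauss(-0.25, 0.30) for _ in range(20)]

p = permutation_p_value(strength, interval)
print(f"p = {p:.3f}")
```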

Consistency vs. Variation

At this point we need to look at the actual findings. In most cases, not everyone in a group will experience the same change. Some may get a big drop, some a small drop, and some a small rise. If that's the case, the average change would need to be quite big for the statistical tests to show that it's statistically significant. If, on the other hand, everyone in the group had a small drop, the average change could be quite small and we would still get a significant result. It's a case of consistency vs. variation.
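The sketch below, in Python, makes the consistency-vs-variation point with a simple one-sample t statistic (the mean change divided by its standard error). Both hypothetical groups of 8 people have the same average change of -0.2 units; only the spread differs. As a rough guide, with 8 people a |t| above about 2.36 corresponds to p < 0.05 (two-sided).

```python
import math
import statistics

def t_statistic(changes):
    """One-sample t statistic: mean change divided by its standard error."""
    standard_error = statistics.stdev(changes) / math.sqrt(len(changes))
    return statistics.mean(changes) / standard_error

# Hypothetical within-person changes; both groups average exactly -0.2 units
consistent = [-0.1, -0.2, -0.3, -0.2, -0.1, -0.3, -0.2, -0.2]  # everyone drops a little
variable = [-1.2, 0.6, -0.9, 0.5, -0.4, 0.3, -0.8, 0.3]        # big drops and some rises

for label, group in (("consistent", consistent), ("variable", variable)):
    print(f"{label}: mean {statistics.mean(group):+.2f}, t = {t_statistic(group):+.2f}")
```

The consistent group gives a t of about -7.5, the variable group about -0.8, even though the average change is identical.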

Statistical Blender vs. Real World Meaning

Let's say we get a 'probably real' difference. We still need to ask: is it significant in the everyday sense? Was it substantial and/or important? The answer depends on your perspective and on which of the two scenarios above applies. As a researcher, you would be happy to have found something, big or small. But suppose the second scenario applies: we are fairly confident that there was a drop, but the drop was consistently small. In that case, you as a patient, clinician, or trainer might not care, because there are other ways of getting a much bigger drop. How you view it would also depend on whether a small drop matters in your context. Had the study been about a supplement that reduced body fat by only a very small amount, you might consider it useful for dropping the last 1-2% of body fat before a physique contest, but not for helping a client lose 10 kg (22 lb).

Clinical vs. Public Health Significance

The example above illustrates what is often called clinical significance, though the idea applies outside the clinic too. If you work one-on-one with clients or use research to inform your own lifestyle, you benefit most by first confirming statistical significance and then applying your own criteria to judge clinical significance.
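A minimal Python sketch of that two-step check, under stated assumptions: it builds an approximate 95% confidence interval for the mean change using a normal approximation (z = 1.96, which is a little optimistic for small groups), then compares the interval with a smallest worthwhile change that you choose for your own context. The function name, labels, and example numbers are hypothetical.

```python
import math
import statistics

def classify_effect(changes, smallest_worthwhile, z=1.96):
    """Classify a mean change as statistically and/or practically meaningful.

    changes: each person's change (here kg of body fat; hypothetical units).
    smallest_worthwhile: positive threshold, same units, below which a change
        does not matter in YOUR context. This is a judgment call, not a statistic.
    z: critical value; 1.96 gives an approximate 95% interval (normal approx).
    """
    mean = statistics.mean(changes)
    se = statistics.stdev(changes) / math.sqrt(len(changes))
    low, high = mean - z * se, mean + z * se

    # Step 1: statistical significance (does the interval exclude zero?)
    if low <= 0 <= high:
        return "not statistically clear"

    # Both bounds now share a sign, so compare their sizes to the threshold
    nearest, farthest = sorted((abs(low), abs(high)))
    # Step 2: practical (clinical) significance in your context
    if farthest < smallest_worthwhile:
        return "statistically clear but too small to matter"
    if nearest >= smallest_worthwhile:
        return "statistically clear and worthwhile"
    return "statistically clear; may or may not be worthwhile"

# Hypothetical, consistently small fat losses (kg)
small_loss = [-0.1, -0.2, -0.3, -0.2, -0.1, -0.3, -0.2, -0.2]
print(classify_effect(small_loss, smallest_worthwhile=0.1))  # statistically clear and worthwhile
print(classify_effect(small_loss, smallest_worthwhile=1.0))  # statistically clear but too small to matter
```

The same data earn different verdicts purely because the threshold changed: a fine-tuning goal before a contest versus a client who needs to lose 10 kg.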

There is another kind, which I like to call public health significance. It matters because the rules are different when you apply a small change to a very large group. Suppose there were a cheap, safe, and easy way to lower people's blood pressure by 1-2 mmHg, one that could be rolled out so that a large portion of the population benefited. Chances are public health professionals and health economists would be pushing it. Why, when no doctor treating an individual patient would care about such a small change? Because shifting the average of a large group a little also shifts a lot of people from a higher risk category into a lower one. Here it's the number of people affected, not the size of the individual effect, that matters. This example should help explain why some very modest findings, in the right context, generate excitement.
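The arithmetic behind that shift can be sketched in Python with the standard library's `statistics.NormalDist`. Every number here is an illustrative assumption, not survey data: systolic blood pressure is taken to be normally distributed with a mean of 125 mmHg and a standard deviation of 15 mmHg in a population of one million, 140 mmHg is used as the 'high' cut-off, and the intervention is assumed to lower everyone's reading by the same 2 mmHg.

```python
from statistics import NormalDist

def people_above(cutoff, mean, sd, population):
    """Expected number of people above a cutoff, assuming a normal distribution."""
    return population * (1 - NormalDist(mean, sd).cdf(cutoff))

# Illustrative assumptions only, not real population data
POPULATION = 1_000_000
MEAN_SBP, SD_SBP = 125.0, 15.0  # systolic blood pressure, mmHg
CUTOFF = 140.0                  # illustrative "high" threshold, mmHg
SHIFT = 2.0                     # average drop, trivial for any one patient

before = people_above(CUTOFF, MEAN_SBP, SD_SBP, POPULATION)
after = people_above(CUTOFF, MEAN_SBP - SHIFT, SD_SBP, POPULATION)
moved = before - after
print(f"About {moved:,.0f} people move below {CUTOFF:.0f} mmHg")
```

Under these assumptions roughly 30,000 people cross below the cut-off, even though no individual reading changed by more than 2 mmHg.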

Take Home Messages

If you're interested in the results of research, check for statistical significance, but then go further. Know that even a highly statistically significant result doesn't necessarily mean the effect was substantial or important; that depends on context. Be clear on the relevant context for you or your client/patient, and understand that in a different context, the same findings may have a different importance or relevance.
